
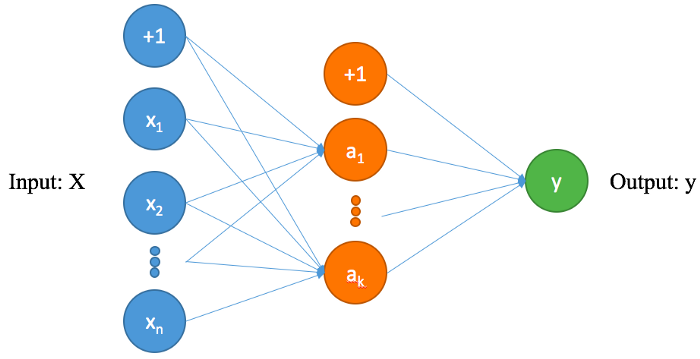

LSTM Introduction

In machine learning, models are very often built as a multi-layer perceptron (Multi-Layer Perceptron, MLP), shown in the figure below.


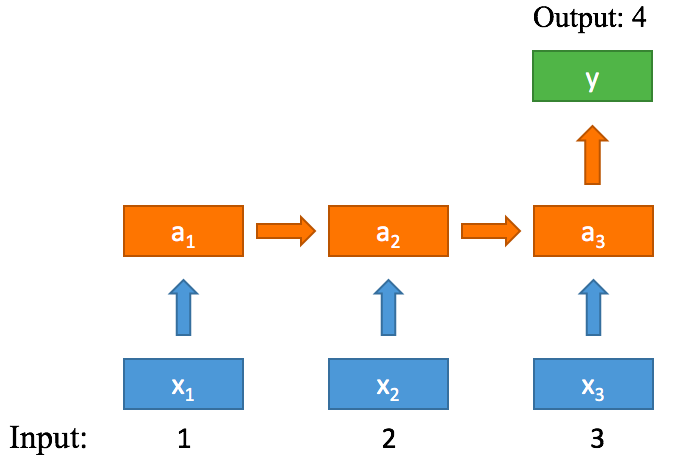

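Multi-layer Perceptron

However, an MLP cannot handle every kind of problem, because it has no built-in way to deal with sequential (time-ordered) data. It processes each input as one fixed-size vector and keeps no memory between inputs. For example, suppose the input [1, 2, 3] should produce 4 while the input [3, 2, 1] should produce 0. The answer depends on the order in which the values arrive, and an MLP does not model that order explicitly, so it struggles to give the expected result. This motivated the Recurrent Neural Network (RNN), whose design is shown in the figure below.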


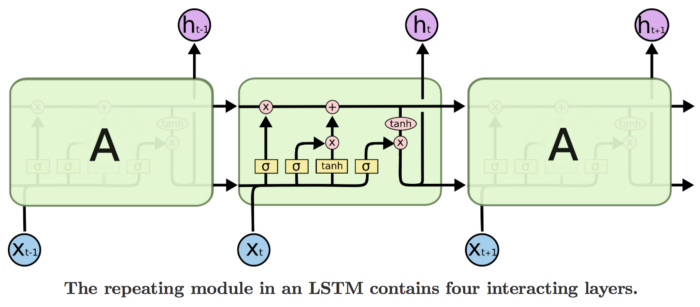

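Recurrent Neural Network

The main idea of an RNN is to feed the hidden state computed from earlier inputs back in at the next time step. This is what lets the network capture sequential order. Long Short-Term Memory (LSTM) is a type of RNN. It differs from a plain RNN by adding extra control units: the input gate, the output gate, and the forget gate, as illustrated in the figure below.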
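Below is a minimal NumPy sketch of the recurrence described above. It is not the Keras implementation. The hidden state is updated as `h = tanh(W_x * x_t + W_h @ h_prev + b)`, and the random weights are arbitrary placeholders. Feeding the same three values in the two orders from the [1, 2, 3] / [3, 2, 1] example produces different final hidden states, which is the order sensitivity an RNN is meant to capture.

```python
import numpy as np

def rnn_forward(xs, W_x, W_h, b):
    """Run a vanilla RNN over a sequence of scalars and return the final hidden state."""
    h = np.zeros(W_h.shape[0])              # initial hidden state h_0 = 0
    for x_t in xs:
        # the previous hidden state h is fed back in at every time step
        h = np.tanh(W_x * x_t + W_h @ h + b)
    return h

rng = np.random.default_rng(0)
hidden = 4
W_x = rng.normal(size=hidden)               # input weights (scalar input -> hidden)
W_h = rng.normal(size=(hidden, hidden))     # recurrent weights (hidden -> hidden)
b = np.zeros(hidden)

h_forward = rnn_forward([1.0, 2.0, 3.0], W_x, W_h, b)
h_backward = rnn_forward([3.0, 2.0, 1.0], W_x, W_h, b)
print(h_forward)
print(h_backward)
```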


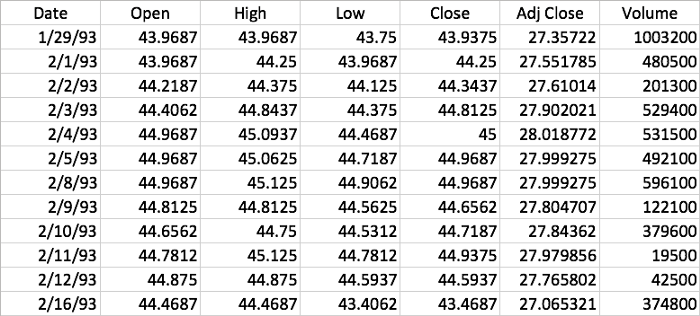
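The three gates named above can be sketched as a single LSTM time step in NumPy. This follows the standard LSTM formulation: sigmoid gates and a tanh candidate. The stacked weight layout and the names `W`, `U`, `b` are assumptions for illustration, and Keras's internal weight layout may differ. The forget gate scales the old cell state, the input gate scales the new candidate, and the output gate decides how much of the cell state becomes the hidden state.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4*units, input_dim), U: (4*units, units), b: (4*units,)
    Rows are stacked as [input gate, forget gate, candidate, output gate].
    """
    z = W @ x + U @ h_prev + b
    i, f, g, o = np.split(z, 4)
    i = sigmoid(i)          # input gate: how much new information to write
    f = sigmoid(f)          # forget gate: how much of the old cell state to keep
    g = np.tanh(g)          # candidate values for the cell state
    o = sigmoid(o)          # output gate: how much of the cell state to expose
    c = f * c_prev + i * g  # new cell state (long-term memory)
    h = o * np.tanh(c)      # new hidden state (the step's output)
    return h, c

units, input_dim = 3, 2
rng = np.random.default_rng(1)
W = rng.normal(size=(4 * units, input_dim))
U = rng.normal(size=(4 * units, units))
b = np.zeros(4 * units)

h, c = np.zeros(units), np.zeros(units)
for x in rng.normal(size=(5, input_dim)):   # a sequence of 5 time steps
    h, c = lstm_step(x, h, c, W, U, b)
print(h.shape, c.shape)                     # (3,) (3,)
```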

Learn more: Hung-yi Lee (李宏毅), ML Lecture 21–1: Recurrent Neural Network (Part I)

Example: Stock Prediction

SPY dataset: Yahoo SPDR S&P 500 ETF (SPY)


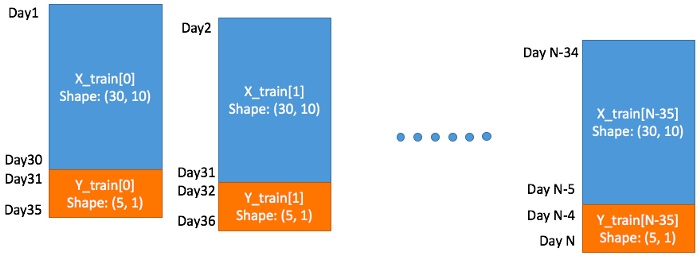

SPY.csv

Goal: use past data to predict the Adj Close for the next few days.

Data Preparation

Import packages

Import pandas, numpy, keras, and matplotlib:

```python
import pandas as pd
import numpy as np
from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Flatten, LSTM, TimeDistributed, RepeatVector
# recent Keras versions export BatchNormalization directly from keras.layers
from keras.layers import BatchNormalization
from keras.optimizers import Adam
from keras.callbacks import EarlyStopping, ModelCheckpoint
import matplotlib.pyplot as plt
# Jupyter/IPython magic: remove this line when running as a plain .py script
%matplotlib inline
```

Read the data

```python
def readTrain():
    # load the daily SPY price history into a DataFrame
    train = pd.read_csv("SPY.csv")
    return train
```

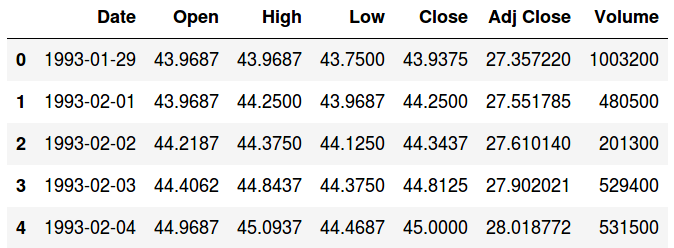


Augment Features

Besides the features provided in the raw data (Open, High, Low, Close, Adj Close, Volume), we can add our own, such as the year, the month, the day of the month, and the day of the week.

```python
def augFeatures(train):
    train["Date"] = pd.to_datetime(train["Date"])
    train["year"] = train["Date"].dt.year
    train["month"] = train["Date"].dt.month
    train["date"] = train["Date"].dt.day        # day of the month (1-31)
    train["day"] = train["Date"].dt.dayofweek   # day of the week (Monday=0 ... Sunday=6)
    return train
```
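To see what the new columns contain, here is the same `augFeatures` logic applied to a hand-made three-row frame. The dates and prices are made up for illustration and are not taken from SPY.csv. Note that `dt.dayofweek` counts Monday as 0, so `day` holds the weekday while `date` holds the day of the month.

```python
import pandas as pd

def augFeatures(train):
    train["Date"] = pd.to_datetime(train["Date"])
    train["year"] = train["Date"].dt.year
    train["month"] = train["Date"].dt.month
    train["date"] = train["Date"].dt.day          # day of the month
    train["day"] = train["Date"].dt.dayofweek     # weekday, Monday=0
    return train

# hypothetical sample rows, for illustration only
sample = pd.DataFrame({
    "Date": ["2017-01-03", "2017-01-04", "2017-01-06"],   # Tue, Wed, Fri
    "Adj Close": [100.0, 101.0, 102.0],
})
print(augFeatures(sample)[["year", "month", "date", "day"]])
```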


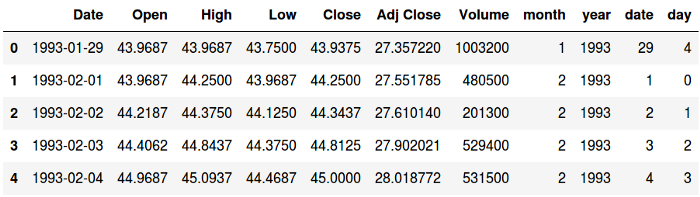

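After Augment Features

Normalization

Normalize every column. Because Date is not a numeric column, drop it first:

```python
def normalize(train):
    # Date cannot be scaled, so remove it before normalizing
    train = train.drop(["Date"], axis=1)
    # mean normalization per column: (x - mean) / (max - min)
    train_norm = train.apply(lambda x: (x - np.mean(x)) / (np.max(x) - np.min(x)))
    return train_norm
```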
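The lambda applies mean normalization, `(x - mean(x)) / (max(x) - min(x))`, to each column. The model is trained on these scaled values, so its predicted Adj Close is also on the normalized scale. The minimal NumPy sketch below shows how the mean and range of the original column can map a prediction back to a price. The helper names `mean_normalize` and `denormalize` are hypothetical and not part of the original code, and the prices are made up.

```python
import numpy as np

def mean_normalize(x):
    """Return the scaled array plus the statistics needed to undo the scaling."""
    mean, span = np.mean(x), np.max(x) - np.min(x)
    return (x - mean) / span, mean, span

def denormalize(x_norm, mean, span):
    # invert x' = (x - mean) / span
    return x_norm * span + mean

adj_close = np.array([200.0, 210.0, 205.0, 220.0])   # made-up prices
scaled, mean, span = mean_normalize(adj_close)
print(scaled)                              # [-0.4375  0.0625 -0.1875  0.5625]
print(denormalize(scaled, mean, span))     # recovers the original prices
```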


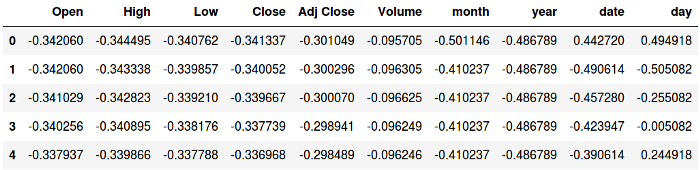

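Build Training Data

Input X_train: the Open, High, Low, Close, Adj Close, Volume, month, year, date, and day of the previous 30 days are the features, so each input has shape (30, 10).

Output Y_train: the Adj Close of the next 5 days is the target, i.e. 5 days × 1 value.

We slide a window across the data to expand it into training samples, as shown in Figure (1).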


Figure (1): expanding the data with a sliding window, where N is the total number of samples in SPY.csv

```python
def buildTrain(train, pastDay=30, futureDay=5):
    X_train, Y_train = [], []
    # each sample: pastDay rows of all features as input,
    # followed by the next futureDay values of "Adj Close" as the target
    for i in range(train.shape[0] - futureDay - pastDay):
        X_train.append(np.array(train.iloc[i:i + pastDay]))
        Y_train.append(np.array(train.iloc[i + pastDay:i + pastDay + futureDay]["Adj Close"]))
    return np.array(X_train), np.array(Y_train)
```
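The windowing logic can be checked on a toy array without pandas. The NumPy-only variant below mirrors `buildTrain`. The name `build_windows` is hypothetical, and column 0 stands in for Adj Close. It keeps the same loop bound of `N - pastDay - futureDay` windows, which leaves out the last possible window. With N = 10 rows, pastDay = 3 and futureDay = 2, it therefore produces 5 samples.

```python
import numpy as np

def build_windows(data, past_day, future_day, target_col=0):
    """Slide a window over `data` (rows = days, cols = features), like buildTrain."""
    X, Y = [], []
    for i in range(data.shape[0] - future_day - past_day):
        X.append(data[i:i + past_day])                                      # past_day x features
        Y.append(data[i + past_day:i + past_day + future_day, target_col])  # next future_day targets
    return np.array(X), np.array(Y)

# 10 days x 2 features; column 0 plays the role of "Adj Close"
data = np.arange(20, dtype=float).reshape(10, 2)
X, Y = build_windows(data, past_day=3, future_day=2)
print(X.shape, Y.shape)   # (5, 3, 2) (5, 2)
print(Y[0])               # [6. 8.]: column 0 of rows 3 and 4
```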

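Shuffle the data

Shuffle the samples so they are no longer ordered by date:

```python
def shuffle(X, Y):
    np.random.seed(10)  # fixed seed so the shuffle is reproducible
    randomList = np.arange(X.shape[0])
    np.random.shuffle(randomList)
    # index X and Y with the same permutation so each input keeps its target
    return X[randomList], Y[randomList]
```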
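The key point of `shuffle` is that one permutation indexes both X and Y, so every input window stays paired with its own target. The quick check below uses toy arrays in which each target is simply its window's index, and it calls the same `np.random.seed` and `np.random.shuffle` as the original.

```python
import numpy as np

def shuffle(X, Y):
    np.random.seed(10)                  # fixed seed: same order on every run
    randomList = np.arange(X.shape[0])
    np.random.shuffle(randomList)       # one permutation...
    return X[randomList], Y[randomList] # ...applied to both arrays

X = np.arange(6).reshape(6, 1, 1)       # window i contains the value i
Y = np.arange(6)                        # target i belongs to window i
Xs, Ys = shuffle(X, Y)
print(Ys)
print(np.array_equal(Xs[:, 0, 0], Ys))  # True: pairs are still aligned
```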

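Training data & Validation data

Set aside part of the training data as validation data; `rate` is the fraction held out:

```python
def splitData(X, Y, rate):
    # everything after the first `rate` fraction is used for training ...
    X_train = X[int(X.shape[0] * rate):]
    Y_train = Y[int(Y.shape[0] * rate):]
    # ... and the first `rate` fraction for validation
    X_val = X[:int(X.shape[0] * rate)]
    Y_val = Y[:int(Y.shape[0] * rate)]
    return X_train, Y_train, X_val, Y_val
```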
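With `rate=0.1`, the first 10% of the already shuffled samples become validation data, and `int()` truncates the count. The 30/5 setup reports 5,710 training and 634 validation windows, i.e. 6,344 in total, and plugging that total in reproduces the split. The arrays here are zero-filled placeholders of the same shapes, not real data. The sketch computes the cut point once, which matches the original whenever X and Y have the same length.

```python
import numpy as np

def splitData(X, Y, rate):
    n_val = int(X.shape[0] * rate)      # size of the validation slice
    X_train, Y_train = X[n_val:], Y[n_val:]
    X_val, Y_val = X[:n_val], Y[:n_val]
    return X_train, Y_train, X_val, Y_val

X = np.zeros((6344, 30, 10))            # placeholder inputs: 6344 windows of 30 days x 10 features
Y = np.zeros((6344, 5))                 # placeholder targets: next 5 Adj Close values
X_train, Y_train, X_val, Y_val = splitData(X, Y, 0.1)
print(X_train.shape, X_val.shape)       # (5710, 30, 10) (634, 30, 10)
```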

Putting all the steps together:

```python
# read SPY.csv
train = readTrain()
# Augment the features (year, month, date, day)
train_Aug = augFeatures(train)
# Normalization
train_norm = normalize(train_Aug)
# build Data, use last 30 days to predict next 5 days
X_train, Y_train = buildTrain(train_norm, 30, 5)
# shuffle the data, and random seed is 10
X_train, Y_train = shuffle(X_train, Y_train)
# split training data and validation data
X_train, Y_train, X_val, Y_val = splitData(X_train, Y_train, 0.1)
```

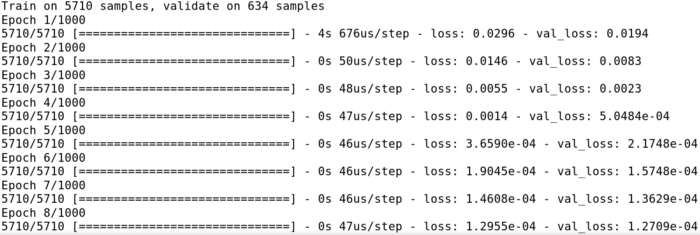

```python
# X_train: (5710, 30, 10)
# Y_train: (5710, 5)
# X_val: (634, 30, 10)
# Y_val: (634, 5)
```

Model Building


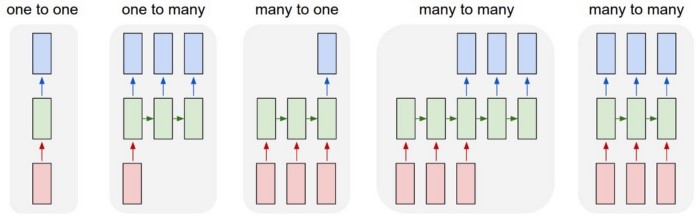

Multiple models: comparing many-to-one and many-to-many


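One-to-One Model

Because this is a one-to-one model, `return_sequences` can also be set to `False`. In that case, however, Y_train and Y_val must stay two-dimensional, with shape (number of samples, 1), instead of being expanded to three dimensions.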

```python
def buildOneToOneModel(shape):
    model = Sequential()
    # input_shape = (time steps, features); replaces the older input_length/input_dim arguments
    model.add(LSTM(10, input_shape=(shape[1], shape[2]), return_sequences=True))
    # output shape: (1, 1)
    model.add(TimeDistributed(Dense(1)))  # or use model.add(Dense(1))
    model.compile(loss="mse", optimizer="adam")
    model.summary()
    return model
```
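`TimeDistributed(Dense(1))` applies one and the same Dense layer, with shared weights, at every time step. The NumPy sketch below uses random placeholder weights and 5 time steps, and the one-to-one model is the case with a single step. It shows that the per-step computation is just a matrix product over the last axis. This also explains the comment that a plain `Dense(1)` works here: Keras's Dense operates on the last axis of a 3-D input.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, timesteps, units = 4, 5, 10
lstm_out = rng.normal(size=(batch, timesteps, units))  # stand-in for LSTM(return_sequences=True) output

kernel = rng.normal(size=(units, 1))   # Dense(1) weights, shared by all time steps
bias = np.zeros(1)

# apply the same Dense layer separately at each time step
per_step = np.stack([lstm_out[:, t, :] @ kernel + bias for t in range(timesteps)], axis=1)
# equivalent single operation over the last axis
vectorized = lstm_out @ kernel + bias

print(per_step.shape)                     # (4, 5, 1)
print(np.allclose(per_step, vectorized))  # True
```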

Set the number of past days, pastDay, to 1 and the number of days to predict, futureDay, to 1 as well:

```python
train = readTrain()
train_Aug = augFeatures(train)
train_norm = normalize(train_Aug)
# use 1 past day to predict 1 future day
X_train, Y_train = buildTrain(train_norm, 1, 1)
X_train, Y_train = shuffle(X_train, Y_train)
X_train, Y_train, X_val, Y_val = splitData(X_train, Y_train, 0.1)
# from 2 dimensions to 3 dimensions: (samples, 1) -> (samples, 1, 1)
Y_train = Y_train[:, np.newaxis]
Y_val = Y_val[:, np.newaxis]
model = buildOneToOneModel(X_train.shape)
# stop once the training loss has not improved for 10 epochs
callback = EarlyStopping(monitor="loss", patience=10, verbose=1, mode="auto")
model.fit(X_train, Y_train, epochs=1000, batch_size=128, validation_data=(X_val, Y_val), callbacks=[callback])
```
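The `np.newaxis` step is needed because `buildTrain` returns targets as a 2-D array of shape (samples, futureDay). A model ending in `TimeDistributed(Dense(1))` or `RepeatVector`, by contrast, outputs (samples, steps, 1). A toy check of the two indexing forms used in this article, with zero placeholders:

```python
import numpy as np

# one-to-one: targets from buildTrain(..., 1, 1) have shape (samples, 1)
Y1 = np.zeros((8, 1))
print(Y1[:, np.newaxis].shape)      # (8, 1, 1)

# 5-day targets from buildTrain(..., 1, 5) or (..., 5, 5) have shape (samples, 5)
Y5 = np.zeros((8, 5))
print(Y5[:, :, np.newaxis].shape)   # (8, 5, 1): one value per predicted day
print(Y5[:, np.newaxis].shape)      # (8, 1, 5): wrong layout for a (5, 1) target
```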

The figure below shows the number of parameters the model uses.
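The parameter counts printed by `model.summary()` can be checked by hand with the standard formulas, assuming Keras defaults (biases enabled). An LSTM with `units` cells reading `input_dim` features has four gate blocks, each with input weights, recurrent weights and a bias, which gives 4 × (units × (input_dim + units) + units) parameters. A Dense layer has inputs × outputs + outputs. `TimeDistributed` and `RepeatVector` add none, so LSTM(10) on 10 features followed by Dense(1) gives 840 + 11 = 851.

```python
def lstm_params(input_dim, units):
    # 4 blocks (input, forget, cell candidate, output), each with
    # input weights, recurrent weights and a bias vector
    return 4 * (units * (input_dim + units) + units)

def dense_params(inputs, outputs):
    # weight matrix plus one bias per output
    return inputs * outputs + outputs

lstm = lstm_params(input_dim=10, units=10)
dense = dense_params(inputs=10, outputs=1)
print(lstm, dense, lstm + dense)   # 840 11 851
```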


Training stopped at epoch 164 with a final val_loss of 2.2902e-05.
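Training ends long before `epochs=1000` because of `EarlyStopping(monitor="loss", patience=10)`. The callback stops training once the monitored value, which here is the training loss rather than val_loss, has failed to improve for 10 consecutive epochs. The pure-Python sketch below simplifies that rule: the real callback has further options such as `min_delta` and `restore_best_weights`, and the loss curve is made up.

```python
def early_stopping_epoch(losses, patience=10):
    """Return the 1-based epoch at which training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:          # improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:                    # no improvement this epoch
            wait += 1
            if wait >= patience:
                return epoch
    return None

# hypothetical loss curve: improves for 5 epochs, then plateaus
losses = [1.0, 0.5, 0.3, 0.2, 0.1] + [0.1] * 20
print(early_stopping_epoch(losses, patience=10))   # 15
```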


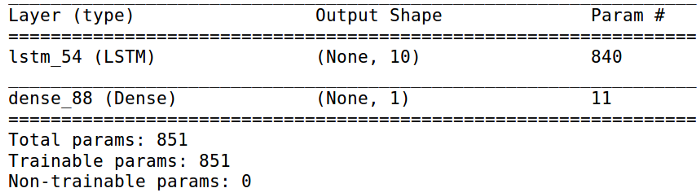

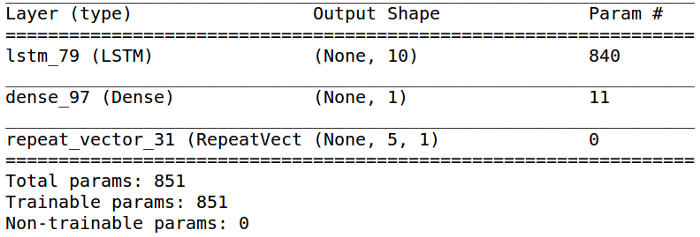

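Many-to-One Model

Set the LSTM parameter `return_sequences=False`, which is also the default when it is not specified. The LSTM then returns only its last time step as a 2-D tensor, so `TimeDistributed` cannot be used:

```python
def buildManyToOneModel(shape):
    model = Sequential()
    # return_sequences defaults to False: only the last time step is returned
    model.add(LSTM(10, input_shape=(shape[1], shape[2])))
    # output shape: (samples, 1)
    model.add(Dense(1))
    model.compile(loss="mse", optimizer="adam")
    model.summary()
    return model
```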
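`return_sequences` only changes which hidden states the LSTM returns. With `True` it returns all of them, with shape (batch, timesteps, units). With `False` it returns just the last one, with shape (batch, units). The NumPy sketch below uses random values as a stand-in for the LSTM output, not a real layer. It shows the relationship and why the 2-D result leaves no time axis for `TimeDistributed` to iterate over.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, timesteps, units = 4, 30, 10
all_states = rng.normal(size=(batch, timesteps, units))  # like return_sequences=True

last_state = all_states[:, -1, :]                        # like return_sequences=False
kernel = rng.normal(size=(units, 1))                     # placeholder Dense(1) weights
prediction = last_state @ kernel                         # matches 2-D targets (samples, 1)

print(all_states.shape, last_state.shape, prediction.shape)  # (4, 30, 10) (4, 10) (4, 1)
```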

Set pastDay=30 and futureDay=1, and note that Y_train must be two-dimensional:

```python
train = readTrain()
train_Aug = augFeatures(train)
train_norm = normalize(train_Aug)
# use 30 past days to predict 1 future day
X_train, Y_train = buildTrain(train_norm, 30, 1)
X_train, Y_train = shuffle(X_train, Y_train)
# because no sequence is returned, Y_train and Y_val must stay 2-D: (samples, 1)
X_train, Y_train, X_val, Y_val = splitData(X_train, Y_train, 0.1)
model = buildManyToOneModel(X_train.shape)
callback = EarlyStopping(monitor="loss", patience=10, verbose=1, mode="auto")
model.fit(X_train, Y_train, epochs=1000, batch_size=128, validation_data=(X_val, Y_val), callbacks=[callback])
```

The figure below shows the number of parameters the model uses.


Training stopped at epoch 113 with a final val_loss of 3.9465e-05.


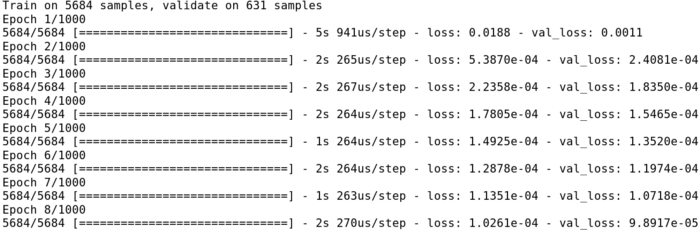

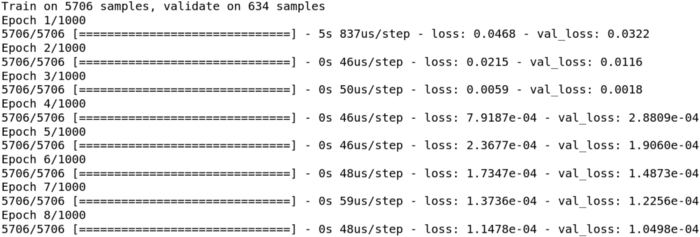

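One-to-Many Model

In a one-to-many model the input has only 1 timestep. `RepeatVector` expects a 2-D input, so the LSTM must use `return_sequences=False`, and `RepeatVector(5)` then expands the output into 5 steps:

```python
def buildOneToManyModel(shape):
    model = Sequential()
    # return_sequences=False (default): output is (samples, 10)
    model.add(LSTM(10, input_shape=(shape[1], shape[2])))
    model.add(Dense(1))
    # output shape: (5, 1); RepeatVector copies the Dense output 5 times
    model.add(RepeatVector(5))
    model.compile(loss="mse", optimizer="adam")
    model.summary()
    return model
```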
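In this model `RepeatVector(5)` comes after `Dense(1)`, so it copies one scalar prediction five times. All five predicted days are therefore identical by construction. The NumPy equivalent of that layer order below uses placeholder values to make this visible. A common alternative, not used in this article, places `RepeatVector` before a second LSTM with `return_sequences=True`, which lets each step differ.

```python
import numpy as np

steps = 5
dense_out = np.array([[0.1], [0.2], [0.3]])         # placeholder Dense(1) output: (batch, 1)

# RepeatVector(5): (batch, features) -> (batch, 5, features)
repeated = np.repeat(dense_out[:, np.newaxis, :], steps, axis=1)
print(repeated.shape)          # (3, 5, 1)
print(repeated[0, :, 0])       # [0.1 0.1 0.1 0.1 0.1]: one value copied to every day
```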

Set pastDay to 1 and futureDay to 5:

```python
train = readTrain()
train_Aug = augFeatures(train)
train_norm = normalize(train_Aug)
# use 1 past day to predict 5 future days
X_train, Y_train = buildTrain(train_norm, 1, 5)
X_train, Y_train = shuffle(X_train, Y_train)
X_train, Y_train, X_val, Y_val = splitData(X_train, Y_train, 0.1)
# from 2 dimensions to 3 dimensions: (samples, 5) -> (samples, 5, 1)
Y_train = Y_train[:, :, np.newaxis]
Y_val = Y_val[:, :, np.newaxis]
model = buildOneToManyModel(X_train.shape)
callback = EarlyStopping(monitor="loss", patience=10, verbose=1, mode="auto")
model.fit(X_train, Y_train, epochs=1000, batch_size=128, validation_data=(X_val, Y_val), callbacks=[callback])
```

The figure below shows the number of parameters the model uses.


Training stopped at epoch 163 with a final val_loss of 5.6081e-05.


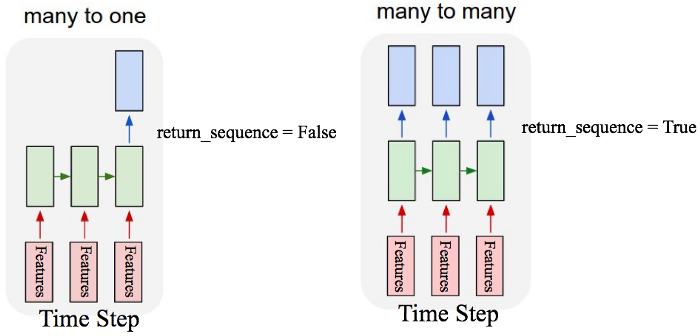

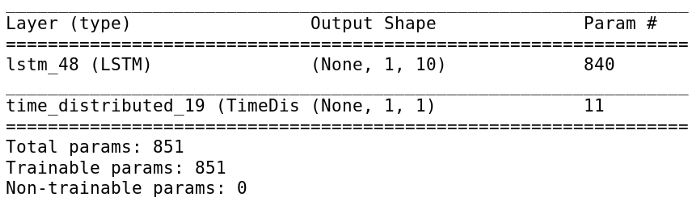

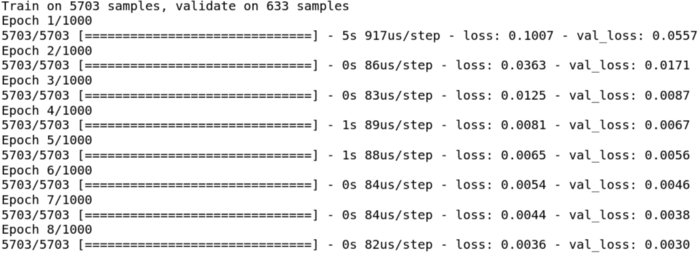

Many-to-Many Model (input and output of the same length)

Set `return_sequences` to `True`, then use `TimeDistributed(Dense(1))` to turn the output into shape (5, 1):

```python
def buildManyToManyModel(shape):
    model = Sequential()
    # return_sequences=True: one output vector per input time step
    model.add(LSTM(10, input_shape=(shape[1], shape[2]), return_sequences=True))
    # output shape: (5, 1)
    model.add(TimeDistributed(Dense(1)))
    model.compile(loss="mse", optimizer="adam")
    model.summary()
    return model
```

Set pastDay and futureDay to the same length, 5:

```python
train = readTrain()
train_Aug = augFeatures(train)
train_norm = normalize(train_Aug)
# use 5 past days to predict 5 future days
X_train, Y_train = buildTrain(train_norm, 5, 5)
X_train, Y_train = shuffle(X_train, Y_train)
X_train, Y_train, X_val, Y_val = splitData(X_train, Y_train, 0.1)
# from 2 dimensions to 3 dimensions: (samples, 5) -> (samples, 5, 1)
Y_train = Y_train[:, :, np.newaxis]
Y_val = Y_val[:, :, np.newaxis]
model = buildManyToManyModel(X_train.shape)
callback = EarlyStopping(monitor="loss", patience=10, verbose=1, mode="auto")
model.fit(X_train, Y_train, epochs=1000, batch_size=128, validation_data=(X_val, Y_val), callbacks=[callback])
```

The figure below shows the number of parameters the model uses.


Training stopped at epoch 169 with a final val_loss of 9.3788e-05.


